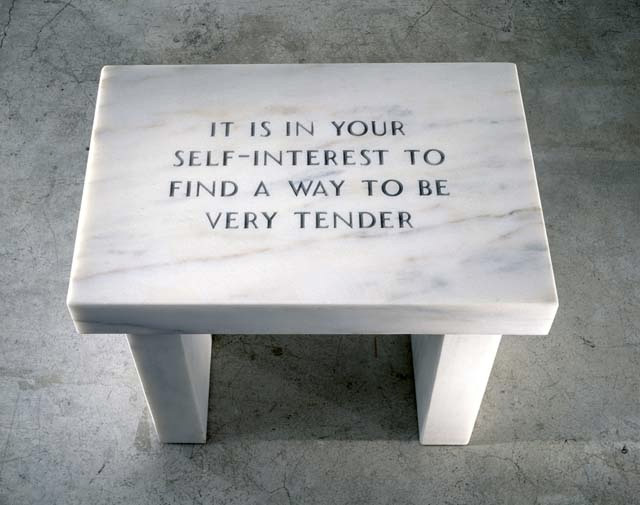

Artist Jenny Holzer agrees.

Many people appreciate love, art, and even daydreaming, without realizing that they inherently make you stronger. While most people only think of evolution as a competitive tooth and nail fight to the top, in fact cooperation is also a strategy that evolved in this environment. People who share and cooperate with each other simply outcompete those who don’t.

This past summer, Scott Alexander posted a piece called Meditations on Moloch on his excellent blog, Slate Star Codex. I like this piece, since it is a strange mashup of ideas from Ginsberg’s Howl, AI disaster scenarios from Bostrom’s Superintelligence, and dark age ideas about how society should be structured from one of these new conservative types. This piece has really captured the imagination of the rationalist community, and many people I know seem to fully agree with his viewpoint. I happen to disagree with some of the premises, so I want to clarify my thoughts on the matter.

It’s very hard to sum up Alexander’s post, as you might imagine from the disparate sources he is trying to bring together. But one key focus is on “multipolar traps,” in which competition causes us to trade away things we value in order to optimize for one specific goal. He gives many examples of multipolar traps, all of which are problematic for various reasons, but I will try to give one example that Bay Area home buyers can relate to: the two-income trap. Alexander believes that two-income couples are driving up home prices, and that if everyone agreed to have only one earner per household, home prices would naturally drop. Here is how Alexander sums up this particular multipolar trap:

It’s theorized that sufficiently intense competition for suburban houses in good school districts meant that people had to throw away lots of other values – time at home with their children, financial security – to optimize for house-buying-ability or else be consigned to the ghetto.

From a god’s-eye-view, if everyone agrees not to take on a second job to help win the competition for nice houses, then everyone will get exactly as nice a house as they did before, but only have to work one job. From within the system, absent a government literally willing to ban second jobs, everyone who doesn’t get one will be left behind.

So he is describing a sort of competition that helps no one, but that no one can escape from. It’s hard to think of how folks could coordinate to solve this, though a law capping real estate prices seems more realistic than banning second jobs. I actually think this is a bad example, since it seems to put the burden of driving up home prices on two-income families. In fact, I am confident that home prices demand two middle income earners because of the huge amount of capital amassed by the very rich. If all middle income earners coordinated their efforts and stopped buying homes priced above a certain level, I predict the crash in real estate prices wouldn’t last very long. The very rich would just continue snapping up real estate and drive the price right back up.

Of course, I’m happy to allow that multipolar traps do exist; government corruption is a persistent bane of civilization, for example. And Alexander himself points out that the universe has traits that protect humans from the destructive impact of multipolar traps. In his view, there are four reasons human values aren’t essentially destroyed by competition: 1. Excess resources, 2. Physical limitations, 3. Utility maximization, and 4. Coordination. Let me try to address each point in turn.

First, here is what Alexander says about excess resources:

This is … an age of excess carrying capacity, an age when we suddenly find ourselves with a thousand-mile head start on Malthus. As Hanson puts it, this is the dream time.

As long as resources aren’t scarce enough to lock us in a war of all against all, we can do silly non-optimal things – like art and music and philosophy and love – and not be outcompeted by merciless killing machines most of the time.

This seems like a misunderstanding of what resources actually are. Humans aren’t like reindeer on an island who die out after eating up all the food. Most animals are unable to manage the resources around them. (Though those other farming species ARE fascinating.) But for humans, resources are a function of raw materials and technology. Innovation is what drives increases in efficiency or even entirely new classes of resources (e.g. hunter-gatherers couldn’t make much use of petroleum). So in fact we are always widening the available resources.

I don’t believe we are in some Dream Time, when humans have a strange abundance of resources, and that we are doomed to grow our population until we reach a miserable Malthusian equilibrium. It’s well understood that human fertility goes down in advanced (rich) cultures and is more highly correlated with female education than food production. Humans seem to be pretty good about reining in their population once they are educated and healthy enough. There is some concern about fertility cults like Mormons and Hutterites, but time isn’t kind to strange cults. Alexander himself points out that their defection rates are very high. It’s not fun taking care of so many kids, I hear.

But actually, innovation might just be a function of population, so the more people we have, the more innovation we will have. Innovation (and conservation) will always expand the resources available to us. It’s foolish to think that we have learned all there is to know about manipulating matter and energy or that we will somehow stop the trend of waste reduction.

But a more interesting point to consider is that art, music, philosophy, and love actually make cultures MORE competitive. The battlefield of evolution is littered with “merciless killing machines” who have been conquered by playful, innovative humans. This has been my most surprising insight as I examined my objections to Alexander’s Moloch or Hanson’s Dream Time. In fact, it’s the cultures with art, philosophy, and love that have utterly crushed and destroyed their competitors. And the reason is complex and hard to see. Surely art and love are facilitators of cooperation, which allows groups to cohere around shared narratives and shared identities. But Hanson and Alexander are dismissing these as frivolous when they actually form the basis of supremacy. Even unstructured play is probably essential to this process of innovation that allows some cultures to dominate. Madeline Levine has a lot to say about this.

Alexander alludes to this poem by Zack Davis that imagines a future world of such stiff competition that no one can indulge in even momentary daydreaming. But this is a deeply flawed understanding of innovation. Innovation is about connecting ideas, and agents that aren’t allowed to explore idea space won’t be able to innovate. So daydreaming is probably essential to creativity. In rationalist terms, Davis imagines a world in which over-fitted hill climbers will dominate, when in fact, they will all only reach local maxima, just as they always have done. They will be outcompeted by agents who can break out of the local maxima. It’s especially ironic that he uses contract drafting as an example where each side has an incentive to scour ideaspace for advantageous provisions that will still be amenable to the counterparty.
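The hill-climbing point can be made concrete with a toy sketch. Everything here is an assumption for illustration, not something from Davis or Alexander: the two-peaked `fitness` landscape, the function names, and the idea of modeling "daydreaming" as occasional random jumps followed by ordinary climbing.

```python
import random

def fitness(x: int) -> int:
    """Hypothetical landscape on 0..99: a low peak at x=20 and a high peak at x=80."""
    low_peak = 10 - abs(x - 20)
    high_peak = 30 - abs(x - 80)
    return max(low_peak, high_peak, 0)

def hill_climb(start: int) -> int:
    """Greedy climber: step to the better neighbor until no neighbor improves."""
    x = start
    while True:
        neighbors = [n for n in (x - 1, x + 1) if 0 <= n <= 99]
        best = max(neighbors, key=fitness)
        if fitness(best) <= fitness(x):
            return x  # no strictly better neighbor: a (possibly local) maximum
        x = best

def climb_with_daydreams(start: int, daydreams: int, rng: random.Random) -> int:
    """Same climber, but it also jumps to random spots and climbs from there."""
    best = hill_climb(start)
    for _ in range(daydreams):
        candidate = hill_climb(rng.randrange(100))
        if fitness(candidate) > fitness(best):
            best = candidate
    return best

if __name__ == "__main__":
    stuck = hill_climb(15)
    explorer = climb_with_daydreams(15, 200, random.Random(0))
    print("pure climber:", stuck, fitness(stuck))
    print("with daydreams:", explorer, fitness(explorer))
```

Starting from 15, the pure climber stops at the low peak (x=20, fitness 10) because every single step away from it looks worse. The version that is allowed to wander can land on the slope of the higher peak and climb to it, which is the sense in which exploration beats relentless local optimization in this toy model.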

It strikes me as very odd to think that human values are somehow at odds with natural forces. Alexander brings up how horrible it is that a wasp would lay its eggs inside a caterpillar so that its young could hatch and consume it from the inside out. Yet humans lovingly spoon beef baby food into their toddlers’ mouths that is derived from factory farm feedlots, which are so filthy that some cows fall over and can no longer walk, but are just bulldozed up into the meat grinder anyway. So much for tender human sensibilities. This might be a good example of where sociopaths can play a role in the population dynamics. A few psycho factory farmers can provide food for the vast squeamish billions who lack the stomach for such slaughter.

Because really, love conquers all, literally. Alexander overlooks this:

But the current rulers of the universe – call them what you want, Moloch, Gnon, Azathoth, whatever – want us dead, and with us everything we value. Art, science, love, philosophy, consciousness itself, the entire bundle. And since I’m not down with that plan, I think defeating them and taking their place is a pretty high priority.

In fact, the universe has been putting coordination problems in front of living organisms for billions of years. Multicellular organisms overcame the competition between single cells, social animals herded together to survive, and humans harnessed art, science, philosophy, and maybe even consciousness, to take cooperation to a whole other level. Far from being a strange weak anomaly, cooperation in all its forms is the tried and true strategy of evolutionary survivors. Alexander makes an allusion to this at the end of his essay when he mentions Elua:

Elua. He is the god of flowers and free love and all soft and fragile things. Of art and science and philosophy and love. Of niceness, community, and civilization. He is a god of humans.

The other gods sit on their dark thrones and think “Ha ha, a god who doesn’t even control any hell-monsters or command his worshippers to become killing machines. What a weakling! This is going to be so easy!”

But somehow Elua is still here. No one knows exactly how. And the gods who oppose Him tend to find Themselves meeting with a surprising number of unfortunate accidents.

No one is sure how Elua survives? Well, it’s clear that multicellular organisms outcompete single-celled organisms. The cooperative armies of the early civilizations defeated whatever hunter gatherer tribes they came across. The nonviolent resistance of India ended British rule, and the Civil Rights movement in America mirrored that success. Modern America has drawn millions of immigrants in part due to its arts and culture, which are known around the world. Coordination is a powerful strategy. Love, art, and culture are all tools of coordination. They are also probably tools of innovation. If creativity is drawing connections between previously unconnected concepts, then having a broader palette of concepts to choose from is an obvious advantage.

Cooperation has certainly evolved, and so have human values. I am with Pinker on this. Alexander prefers to quote esoteric texts in which time flows downhill. But in Pinker’s world, we are evolving upward. Why not? Life is some strange entropy ratchet after all, so why not just go with it? But if our values are tools that have evolved and are evolving, then it doesn’t make sense to lock them in place and jealously protect them.

Alexander is a good transhumanist, so he literally wants humanity to build a god-like Artificial Intelligence which will forever enshrine our noble human virtues and protect us against any alternative bad AI gods. I can’t really swallow this proposition of recursively self-improving AI. My most mundane objection is that all software has bugs and bugs are the result of unexpected input, so it seems impossible to build software that will become godlike without crashing. A deeper argument might be that intelligence is a network effect that occurs between embodied, embedded agents, which are tightly coupled to their environment, and this whole hairy mess isn’t amenable to instantaneous ascension.

But I will set aside my minor objections because this idea of fixed goals is very beloved in the rationalist scene. God forbid that anyone mess with our precious utility functions. Yet this seems like a toy model of goals. Goals are something that animals have, and they are derived from biological imperatives. For most animals, the goals are fairly fixed, but humans have a biological imperative to sociability. So our goals, and indeed our very desires, are subject to influence from others. And, as we can see from history, human goals are becoming more refined. We no longer indulge in cat burning, for example. This even happens on the scale of the individual, as young children put aside candy and toys and take up alcohol and jobs. An AI with fixed goals would be stunted in some important ways. It would be unable to refine its goals based on new understandings of the universe. It would actually be at a disadvantage to agents that are able to update their goals.

If we simply extrapolate from historical evolution, we can imagine a world in which humans themselves are subsumed into a superorganism in the same way that single-celled organisms joined together to form multicellular organisms. Humans are already superintelligences compared to bacteria, and yet we rely on bacteria for our survival. The idea that a superintelligent AI would be able to use nano-replicators to take over the galaxy seems to overlook the fact that DNA has been building nano-replicators to do exactly that for billions of years. Any new contestants to the field are entering a pretty tough neighborhood.

So my big takeaway from this whole train of thought is that, surprisingly, love and cooperation are the strategies of conquerors. Daydreamers are the masters of innovation. These are the things I can put into effect in my everyday life. I want to take more time to play and daydream to find the solutions to my problems. I want to love more and cooperate more. I want to read more novels and indulge in more art. Because, of course, this is the only way that I will be able to crush the competition.

I agree that cooperation should have a long future, and that bodes well in the near term for human-like mental modules that support cooperation. But I disagree that recent high rates of innovation and falls in human fertility bode well for the long run. New fertility tech could allow population to expand much faster than innovation, and innovation just can’t stay high for the very long run.

Robin,

What makes you think that population and innovation are orthogonal or only weakly correlated?

I strongly suspect that innovation is positively correlated with population and some interpretations of the empirical evidence would probably bear that out. A possible causal mechanism would be that we will have a higher absolute number of innovators to explore more possible ideas and also that there will be more collaboration opportunities.

Scott

I agree that all else equal more people makes for faster innovation. But I think Malthusian equilibria are still quite possible if feasible fertility rates are very high. And in the very long run we will run out of useful innovations.

How do you explain the reduced fertility rates of rich countries? Possible fertility rates are already much higher here in the developed world, yet humans seem to have a consistent tendency to downregulate fertility in these environments.

Low fertility today is clearly out of biological equilibrium, but slow and expensive fertility tech makes adjustment to equilibrium very slow. If tech changes, as with ems, adjustment might be much faster.

I’m not sure if I understand this argument. What do you mean by biological equilibrium? And really, why would biological imperatives necessarily apply to emulated humans anyway? You seem to suggest that the only reason that women aren’t having more babies in the developed world is that it’s expensive for them. I agree that more women would probably have children if the costs were lower, but it’s not at all clear to me how that cost of reproduction could be reduced. If a mother (or father) could simply make a copy of themselves, that new copy would still require resources comparable to the parent.

By “biological” I mean “evolutionary”, not “protein-based”. If many skilled workers could be instantly created by anyone, wages would quickly fall to subsistence levels. Most people might not do it, but it would only take a few doing it to make the change.

Ah, ok. So an employer would be incentivized to provision resources to create workers? Who is responsible for sustaining the worker once created? The employer or the worker themselves?

When the wage is above the subsistence level, there is room to create more people and have jobs for them. Thus the wage drops to that level.

What sort of jobs do you imagine that humans will be able to do more cheaply than machines in the far future?

This idea that wages will drop to subsistence levels is known as the Iron Law of Wages, so all the standard critiques of this model apply. Is there any actual evidence for this model playing out in the real world? Why should we assign any predictive power to this model?

I use standard supply & demand analysis that doesn’t care who owns the items. So I’m including robots in the analysis, and not caring who owns them.

It doesn’t seem appropriate to apply the term “wages” to robots. Any value-producer that is owned should be treated as capital. Just to clarify, it seems likely that automation will actually remove ALL opportunities for humans to contribute work to the economy thus effectively reducing wages to zero. The only way most humans would survive in this world would be by forcing property owners to provide them with a stipend of some kind. Although I recall that you proposed another model where everyone would own some value producing machines effectively making everyone a capitalist, but I don’t see how that scenario could play out in real life.

I guess this takes as a premise that there is some value add that agents with property rights will still be able to provide in the far future which I am skeptical of. It seems that machines should be able to perform any job a human can do without granting it property rights. This leaves the majority of humans with a very different set of problems, mostly political.

If groups such as Mormons and Hutterites are experiencing much defection, that means the remaining population is being selected for a desire to remain in that kind of group. The Hutterite population appears to be growing in spite of those alleged defections. The Amish population seems to be doubling about every 20 years. What’s going to stop that trend?

Your discussion of goals sounds like mostly you disagreeing with LWers about terminology. The example goals you mention are mostly ones where Eliezer would reply something like “that’s a subgoal, of course we should be willing to change that to achieve our supergoal(s)”. Your reference to “new understandings of the universe” sounds similar to a harder problem where MIRI is struggling to find a good answer (see Ontological Crises in Artificial Agents, https://intelligence.org/files/OntologicalCrises.pdf). How can you tell whether there are specific goals that Eliezer wants to preserve but you may want to change?

Peter,

I don’t see how a child with a sub-goal of play and an adult with a sub-goal of work can be said to have the same ultimate goal. A child may ultimately value its own pleasure to the exclusion of others, and an adult learns to ultimately value the well-being of others. Similarly, as societies evolve we see this expanding circle of empathy. Eliezer’s CEV doesn’t mimic the way human values have actually evolved over time. It seems that CEV could easily lead to an AI-enforced Galactic Islamic Caliphate. I am not an activist; I don’t want to change human values one way or another. I am observing that they are evolving and that societies with wider circles of empathy outcompete ones with narrower circles. Gnon actually ends up crafting more and more loving values by killing off the even slightly less cooperative. This doesn’t seem like a process we should interfere with at all.

I haven’t actually looked into Hutterites or the Amish. It may not matter if their populations end up pushing us against resource limitation since higher populations seem to lead to more innovation and expansion of resources. The Iron Law of Wages model simply has no predictive power and should be discarded.

Scott

One simple ultimate goal that could produce both child play and adult work subgoals is reproductive fitness (play looks like a feature evolved for learning).

If there were some obstacle to us having a utility function which produced both child play and adult work, wouldn’t more adult workers regret having played as children?

What differences between current evolution of human values and CEV would make a Caliphate more likely under the latter?

I agree with you about the advantages of population growth, and don’t see a conflict between that and Malthusian wages.

I have to think about this goal question more, but reproductive fitness seems like a poor model of an ultimate goal. It fails to predict a lot of human behavior, e.g. why don’t we see more sperm and egg donation? Why do some people not reproduce? Also, it’s generally not a conscious goal, which might matter. Human goal structures don’t seem to boil down to a single ultimate, unchanging goal.

I think that we don’t regret playing as children because we recognize that we had different priorities. Of course maybe we should regret not playing more as adults. I think this is a good point, but I have to think about it more.

I might misunderstand CEV, but I assume that a majority of Muslims would choose Islamic law even if they knew its ultimate outcome. AI would thus enforce it, but I predict that Islamic states will fall behind other states in resource acquisition since they largely preclude half of the workforce from generating value.

Is there any evidence at all of Malthusian wages in the real world? Why should we assign any predictive value to this theory?

I mentioned reproductive fitness as an example of what an ultimate goal would look like. I don’t have an adequate way to fully understand my actual ultimate goal, which is something along the lines of a messy program that my DNA created in an attempt to improve its reproductive fitness. Didn’t CFAR convince you that we have goals that differ from what we consciously believe our goals are?

I presume CEV would allow people who want an Islamic state to have one. That sounds different from your prior reference to a Galactic Caliphate, and I’m now unclear what you don’t like about it.

If you look at human or biological history without privileging the last century, you should see lots of evidence that Malthusian wages are fairly normal.

CFAR convinced me that system 1 has needs that system 2 isn’t always aware of and that can interfere with system 2’s goals. I still can’t picture an ultimate human goal that is very predictive of actual behavior, which is why I strongly prefer the idea that human ultimate goals are dynamic.

I might be misunderstanding coherence. I assumed that a majority of people who felt strongly enough about an idea would be able to impose that on everyone. I just went back and skimmed through the CEV paper and it’s not clear to me how coherence can even occur with strong disagreement.

It seems that individual values like a belief in Islam wouldn’t actually change if that individual just knew more or thought faster or was the person they wished they were. It may be that we just mimic the values of those around us (I should take a cue from Thiel and pick up on Girard’s mimetic desire). And the values we have lying around are the ones that killed off the previous generation of values.

I’m going to have to do more research on this Malthus question. It seems that the iron law of wages failed to be predictive as far back as the 1800s: http://en.wikipedia.org/wiki/Iron_law_of_wages.

Eliezer and I also can’t visualize a completely realistic human utility function. Should we conclude it’s too mysterious to ever be described? How about using the term “human nature”? Even if it changes, I say it changes in a way that sufficiently complex software could describe predictably.

If the improved coordination that you and Pinker mention doesn’t enable Muslims and atheists to cooperate, then we’ll need something more than just CEV.

I’m relying on Fogel’s The Escape from Hunger and Premature Death for when Malthusian conditions ended. But if we disagree about whether evidence from before 1800 is relevant, how much do the 1800s matter?

I overlooked this comment, but I am still interested in these topics so I will try to respond even though a month has passed:

I certainly don’t think that human goals are too mysterious to model, simply that they seem to be dynamic, social, and biological. Most of the rationalists that I discuss this with are surprised when I suggest that human goals are dynamic. A lot of the framing of utility functions in these rational circles seems to assume fairly fixed goals. There is a lot of discussion about goals, and they are important to us, yet I think many of us are overlooking these basic characteristics of human goal systems.

I actually predict that less cooperative cultures will be marginalized and will fail in the long run. Muslim cultures will simply need to become more cooperative in order to survive.

I don’t think there is anything special about the 1800s. I am just questioning whether there is ANY empirical evidence of human population following Malthusian models. I have spoken to several anthropologists who strongly dispute the theory that human population is a function of food production. I could kick myself for not asking for references at the time of those conversations. This seems like a very important question to figure out and I want to read up on this. Does Fogel’s book provide evidence that Malthusian models are applicable to human population?

I can’t give a definite statement of what a human utility function is, but I can identify ones that I consider definitely not human (meaning there’s at least something fixed about human goals). If I saw an alleged human upload trying to turn the entire universe into 10-nanometer images of smiley faces, I’d say it was not an uploaded human mind. Would you think it plausible that such a mind was a human whose goals had changed? How does your position differ from the ideas that Pinker attacks in The Blank Slate?

Is there evidence of a society with agriculture before 1900 being far from famine without having suffered a recent population crash due to war/plague? (Fogel does show evidence that in the centuries before 1900 people were barely able to get enough calories to survive.)

There are reports of hunter-gatherer societies being able to feed themselves on a few hours of work per day. That would be evidence of non-Malthusian conditions if the population doesn’t suffer occasional crashes (rare but severe drought? war? plague?). Note that hunter-gatherers have a much lower maximum rate of reproduction.

When I model how humans actually found food as hunter-gatherers, it seems that more humans tend to find more food and then share that food. Even if we do not account for differing search strategies, just having more searchers will more reliably locate the fruit trees and large game that can’t be consumed by any one individual but can be shared…
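A back-of-the-envelope version of this searcher model, under assumptions that are mine rather than anything established in the thread: each searcher independently has the same fixed chance `p_single` of locating a shareable food patch on a given day, and the function name is hypothetical.

```python
def chance_group_finds_patch(searchers: int, p_single: float) -> float:
    """Probability that at least one of `searchers` independent foragers
    locates a patch, when each succeeds with probability `p_single`."""
    # The group fails only if every searcher fails.
    return 1.0 - (1.0 - p_single) ** searchers

if __name__ == "__main__":
    for n in (1, 5, 10, 20):
        print(n, round(chance_group_finds_patch(n, 0.1), 3))
```

Under these assumptions a lone forager with a 10% daily chance of success becomes a group of ten with roughly a 65% chance, so reliability rises quickly with the number of searchers. The sketch says nothing about whether a patch feeds everyone who shares it, which is the part the Malthusian side of this discussion would contest.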